This is an English version of my previous article, which was written in French: Faire du multi-texturing avec VTK.

I believe it will help many more people in English, since VTK is used worldwide… And I don’t want to bother with a multilingual WordPress plugin yet. 🙂

In this blog post I will talk about what has been my main research subject over the last two months at my part-time job.

It was about multi-texture mapping on a 3D polygon mesh, using the open source library VTK. I mean it when I say “research subject”: at the time of writing, it seems that almost no one has tried to do this with VTK. The feature is present, but it just doesn’t work out of the box.

This is mainly why it took me so long to find out how to do it: there is no documentation at all on the Web and, as far as I can see, nobody who succeeded has shared their knowledge. My solution came from a C++ test file buried deep in the VTK repository. I’ll come back to it later; first, let’s get a little context.

The Visualization ToolKit

VTK is a pretty big library layered over OpenGL, a free API for generating 3D (and 2D) images. Since OpenGL is a multi-platform API, VTK naturally is too, and it is available in several languages (C++, Python, Java, Tcl/Tk). It is used by many research institutes, and by companies that integrate it into commercial applications, so VTK makes life easier for many people around the world who work in 3D imaging and may not want to deal with “pure” OpenGL code.

Briefly, VTK knows how to do a lot of things: cubes, spheres, triangles, more complex geometrical shapes (like a human body), and more… The toolkit handles not only pure geometry but also provides some very useful classes to interface with the standard 3D file formats and with images. I, for example, found the VRML, OBJ and JPEG reader classes very useful. In the real world of 3D imaging, it is quite usual to generate shapes from photographs in one application, and to rework or view them in another. That’s why those classes are interesting: they make it easy to import and export 3D shapes in the standard formats used by the biggest software packages around.

I’ll take this opportunity to point out that all the following examples were made with VTK 5.8.0.

Texture mapping

The VTK feature that especially interests us today is the ability to map one (or several) texture(s) onto a 3D object. This is usually called “texturing” an object, i.e. “clothing” the 3D object with the pixels of a 2D picture, by giving the program a mapping that tells it “this pixel of the image must be associated with this point in space”. There is little point in re-explaining all the theory here, since literature on the subject is abundant.

What you should remember is that we use UV mapping: a texture image, whatever its size, is addressed with coordinates going from the bottom left corner, at (0, 0), to the upper right corner, at (1, 1). (If you followed, the point at the center of the image always has coordinates (0.5, 0.5).)
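To make the UV convention concrete, here is a minimal sketch in plain C++ (no VTK involved) that converts a UV pair into a pixel position. The function name `uvToPixel` is made up for this illustration, and it assumes the image is stored with row 0 at the top, as most image files are, which is why V gets flipped.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <utility>

// Hypothetical helper (not part of VTK): converts a UV coordinate to the
// (column, row) of the nearest pixel in a width x height image.
// Assumption: row 0 is the TOP row of the image, so the V axis is flipped
// (UV (0, 0) is the bottom left corner).
std::pair<int, int> uvToPixel(double u, double v, int width, int height)
{
    assert(width > 0 && height > 0);
    // Keep the coordinates inside the [0, 1] range before scaling.
    u = std::min(std::max(u, 0.0), 1.0);
    v = std::min(std::max(v, 0.0), 1.0);
    int col = static_cast<int>(std::lround(u * (width - 1)));
    int row = static_cast<int>(std::lround((1.0 - v) * (height - 1)));
    return std::make_pair(col, row);
}
```

With a 101 x 51 image, (0, 0) lands on the bottom left pixel, (1, 1) on the top right one, and (0.5, 0.5) in the middle, whatever the image size.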

VTK handles the simple case of a single texture image well. We’ll see that texturing a polygon with several images can be hard… But the goal of this article is to demonstrate that it is actually possible!

« Back to Basics » : Monotexture

I won’t spend long on this since it’s what VTK handles by default; I just want to show a working code example. If you want to try at home, I took the Bugs Bunny files available on the Artec3D website. I won’t explain how to install VTK or how it works either: there is plenty of documentation on these topics out there on the Web.

Here’s the code to generate a vtkPolyData (a generic VTK data structure, widely used when dealing with complex polygons) from either a VRML file (with vtkVRMLImporter) or an OBJ file (with vtkOBJReader). If you want to try, remember to comment out the method you don’t use.

I’d like to stress that these two methods are not equivalent: an OBJ file only serves to describe a single 3D object. VRML, which is a kind of language of its own, has a much wider goal and can describe entire 3D scenes, including animations, etc… But here’s the code 😉

[cc escaped="true" lang="cpp"]
#include <iostream>
#include <string>

#include <vtkActor.h>
#include <vtkActorCollection.h>
#include <vtkDataArray.h>
#include <vtkJPEGReader.h>
#include <vtkMapper.h>
#include <vtkOBJReader.h>
#include <vtkPointData.h>
#include <vtkPolyData.h>
#include <vtkPolyDataMapper.h>
#include <vtkRenderWindow.h>
#include <vtkRenderWindowInteractor.h>
#include <vtkRenderer.h>
#include <vtkSmartPointer.h>
#include <vtkTexture.h>
#include <vtkVRMLImporter.h>

void TestSimpleTexturing()
{
  std::string iname = "Bugs_Bunny.obj";
  std::string imagename = "Bugs_Bunny_0.jpg";

  // Read the image which will be the texture
  std::cout << "Reading image " << imagename << "..." << std::endl;
  vtkSmartPointer<vtkJPEGReader> jPEGReader = vtkSmartPointer<vtkJPEGReader>::New();
  jPEGReader->SetFileName(imagename.c_str());
  jPEGReader->Update();
  std::cout << "Done" << std::endl;

  // Create the texture
  std::cout << "Making a texture out of the image..." << std::endl;
  vtkSmartPointer<vtkTexture> texture = vtkSmartPointer<vtkTexture>::New();
  texture->SetInputConnection(jPEGReader->GetOutputPort());
  std::cout << "Done" << std::endl;

  // Method 1: import geometry from a VRML file
  // (make iname point to a VRML file, and comment this part out if you use the OBJ reader)
  // DANGER !! Crashes if lines in the file are too long
  vtkVRMLImporter *importer = vtkVRMLImporter::New();
  std::cout << "Importing VRML file..." << std::endl;
  importer->SetFileName(iname.c_str());
  importer->Read();
  importer->Update();
  vtkDataSet *pDataset;
  vtkActorCollection *actors = importer->GetRenderer()->GetActors();
  actors->InitTraversal();
  pDataset = actors->GetNextActor()->GetMapper()->GetInput();
  vtkPolyData *polyData = vtkPolyData::SafeDownCast(pDataset);
  polyData->Update();
  std::cout << "Done" << std::endl;

  // Method 2: import geometry from an OBJ file
  std::cout << "Reading OBJ file " << iname << "..." << std::endl;
  vtkOBJReader *reader = vtkOBJReader::New();
  reader->SetFileName(iname.c_str());
  reader->Update();
  vtkPolyData *polyData2 = reader->GetOutput();
  std::cout << "Obj reader = " << polyData2->GetNumberOfPoints() << std::endl;
  std::cout << "Obj point data = " << polyData2->GetPointData()->GetNumberOfArrays() << std::endl;
  std::cout << "Obj point data tuples = " << polyData2->GetPointData()->GetArray(0)->GetNumberOfTuples() << std::endl;
  std::cout << "Obj point data compon = " << polyData2->GetPointData()->GetArray(0)->GetNumberOfComponents() << std::endl;

  // Mapper and actor (here fed with the OBJ reader's output)
  vtkSmartPointer<vtkPolyDataMapper> mapper = vtkSmartPointer<vtkPolyDataMapper>::New();
  mapper->SetInput(polyData2);
  vtkSmartPointer<vtkActor> texturedQuad = vtkSmartPointer<vtkActor>::New();
  texturedQuad->SetMapper(mapper);
  texturedQuad->SetTexture(texture);

  // Visualize the textured mesh
  vtkSmartPointer<vtkRenderer> renderer = vtkSmartPointer<vtkRenderer>::New();
  renderer->AddActor(texturedQuad);
  renderer->SetBackground(0.2, 0.5, 0.6); // Background color: blue-green
  renderer->ResetCamera();
  vtkSmartPointer<vtkRenderWindow> renderWindow =
    vtkSmartPointer<vtkRenderWindow>::New();
  renderWindow->AddRenderer(renderer);
  vtkSmartPointer<vtkRenderWindowInteractor> renderWindowInteractor =
    vtkSmartPointer<vtkRenderWindowInteractor>::New();
  renderWindowInteractor->SetRenderWindow(renderWindow);
  renderWindow->Render();
  renderWindowInteractor->Start();
}
[/cc]

Some amusing things:

At first we tried with a really old GPU, and the texture didn’t display properly. Instead of the image, there was a kind of random mix of checkerboards. I didn’t keep any screenshot but it was very characteristic, so if you have the same problem…

We also quickly moved from the VRML to the OBJ version of Artec’s scanned objects. While opening an Artec VRML file, we found out that Artec puts all of a polygon’s information on a single, very long line (up to a few hundred thousand columns). Sadly, this makes the VTK VRML importer, which is based on yacc/lex, crash absolutely silently: it only shows an empty window, without any mesh. But even after splitting those very long lines into several shorter ones, the mesh wouldn’t show up. I personally think that VTK’s VRML importer just doesn’t handle such large meshes.

I find it kind of funny that from this ugly texture image:

we can obtain this:

Multiple texture mapping, “multitexturing”, or where the serious business begins

Okay, so texturing an object with one image is cool, but for very large meshes we sometimes need to read texture information from several different images. And that’s where problems begin. I’ll take the example of an OBJ file, since it’s a very simple format. In a classical OBJ file, we find:

- first the vertices in space, prefixed by “v”, followed by three coordinates (x y z);

- texture coordinates, prefixed by “vt” (for “vertex texture”), followed by two coordinates which, if you followed, are always between 0 and 1;

- faces, prefixed by “f”, followed by the indices of the vertices making up the face. An index is just the vertex’s number, given by its order of appearance in the file. The number of indices varies, since it depends on how many points the face connects. However, since most of the time faces are triangles (in 3D geometry, EVERYTHING is a triangle), we end up with lines like “f 1/1 2/2 3/3”. The “x/n” notation tells the reading program to bind vertex number x to texture coordinate (“vt”) number n, numbered the same way;

- other stuff, more or less useful. One should read the Wavefront OBJ specification, but that’s all we need to know for the moment.
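As an illustration of the “x/n” notation, here is a small sketch in standard C++ that splits a face line into its (vertex, texture coordinate) index pairs. The names `FaceCorner` and `parseFaceLine` are made up for this example and have nothing to do with how vtkOBJReader is written. The sketch converts the 1-based OBJ indices to 0-based ones and does not handle the negative (relative) indices the OBJ format also allows.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// One corner of a face: which vertex, and which texture coordinate.
struct FaceCorner {
    int vertex;   // zero-based index into the "v" list
    int texCoord; // zero-based index into the "vt" list, -1 if absent
};

// Hypothetical helper: parses a line such as "f 1/1 2/2 3/3".
// Accepts the "v", "v/vt", "v/vt/vn" and "v//vn" token forms.
std::vector<FaceCorner> parseFaceLine(const std::string& line)
{
    std::istringstream in(line);
    std::string keyword;
    in >> keyword;
    assert(keyword == "f");

    std::vector<FaceCorner> corners;
    std::string token;
    while (in >> token) {
        FaceCorner corner = {-1, -1};
        const std::size_t slash = token.find('/');
        // OBJ indices start at 1, hence the "- 1".
        corner.vertex = std::stoi(token.substr(0, slash)) - 1;
        if (slash != std::string::npos) {
            const std::size_t second = token.find('/', slash + 1);
            const std::size_t length = (second == std::string::npos)
                                           ? std::string::npos
                                           : second - slash - 1;
            const std::string vt = token.substr(slash + 1, length);
            if (!vt.empty()) {
                corner.texCoord = std::stoi(vt) - 1;
            }
        }
        corners.push_back(corner);
    }
    return corners;
}
```

For “f 1/1 2/2 3/3” this gives three corners, each pairing vertex i with texture coordinate i, which is exactly the one-to-one layout the rest of the article relies on.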

What does VTK do when reading such a file?

Simple: it reads the vertices (as expected), builds all the polygon’s faces (ok)… And what happens to the texture coordinates, you might ask? Well, the VTK OBJ reader simply puts them in a list inside the vtkPolyData produced as the reader’s output.

With a single texture, this doesn’t cause any trouble. When displaying the polygon, VTK looks in this list and applies one texture coordinate to each vertex of the polygon (that’s mainly why it’s important to have exactly as many texture coordinates as vertices).

Problems with this method begin when there is more than one image to apply… There’s a subtlety in the OBJ format: it can use an external materials file (a .mtl) that specifies different zones, modifications… and, especially, the name of the image file that the next texture coordinates (the “vt” ones, remember?) refer to. To do this, the OBJ format uses the usemtl command.

Problem: VTK doesn’t know how to read this instruction. It therefore cannot tell that some texture coordinates refer to one image and the next ones to another. It puts all the coordinates in one single list and cannot tell which image each of them belongs to.

Before going further, note that the following examples use the files available at this address.

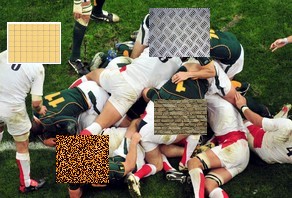

So, when we try the “classical” method shown above, we obtain this pretty chaotic result:

Pretty bizarre, isn’t it?

The result is strange. Half of the body is correctly textured (its left side, on the right of the picture), but not the rest. Elsewhere, it looks as if VTK used the same image, but not at the right place. And that’s exactly what happens here! VTK just has one general list of texture coordinates, and since it doesn’t know which coordinates go with which texture, even when we set multiple textures on the actor it falls back to its default behaviour, i.e. it textures the whole polygon with only one of the textures given to it. This example uses three textures, each meant for a different zone of the polygon. If we change the order in which we give the textures, a different zone will be correctly textured.

So how do we do it?

It’s a question I asked myself for a long time… And the answer came from a VTK test file, found in the darkest depths of their Git repository. The method is a bit more complicated.

Long story short, the goal is to create several “texture units” and tell VTK that each unit uses a different image. We also have to specify which list of coordinates to use for each texture.

Let’s go through the important steps of their test:

[cce lang="cpp"]
vtkFloatArray *TCoords = vtkFloatArray::New();
TCoords->SetNumberOfComponents(2);
TCoords->Allocate(8);
TCoords->InsertNextTuple2(0.0, 0.0);
TCoords->InsertNextTuple2(0.0, 1.0);
TCoords->InsertNextTuple2(1.0, 0.0);
TCoords->InsertNextTuple2(1.0, 1.0);
TCoords->SetName("MultTCoords");
polyData->GetPointData()->AddArray(TCoords);
TCoords->Delete();
[/cce]

They create a texture coordinates list and add it to the PolyData. Note that they give it a specific name (“MultTCoords”). This list is actually our biggest problem, but I’ll come back to it later.

[cce lang="cpp"]
vtkTexture * textureRed = vtkTexture::New();
vtkTexture * textureBlue = vtkTexture::New();
vtkTexture * textureGreen = vtkTexture::New();
textureRed->SetInputConnection(imageReaderRed->GetOutputPort());
textureBlue->SetInputConnection(imageReaderBlue->GetOutputPort());
textureGreen->SetInputConnection(imageReaderGreen->GetOutputPort());

// replace the fragments color and then accumulate the textures
// RGBA values.
textureRed->SetBlendingMode(vtkTexture::VTK_TEXTURE_BLENDING_MODE_REPLACE);
textureBlue->SetBlendingMode(vtkTexture::VTK_TEXTURE_BLENDING_MODE_ADD);
textureGreen->SetBlendingMode(vtkTexture::VTK_TEXTURE_BLENDING_MODE_ADD);
[/cce]

After reading the images with a JPEGReader and converting the image data into textures, they give each texture a specific blending mode. It’s a very important step, absent from the single texture case, because we have to tell VTK what to do when two textures overlap (in our example, each texture image covers a different part of the mesh, so it matters less). Also note that the order is important: the texture mapped first must be in REPLACE mode and all the others in ADD mode, otherwise the textures won’t be displayed as they should. There is plenty of information on what these modes mean, like here.
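To see why the order matters, here is a rough sketch of the arithmetic in plain C++. It assumes that the two modes behave like the classic OpenGL fixed-function “replace” and “add” texture environments (the texel overwrites the current colour, or is added to it channel by channel and clamped to 1), and it ignores alpha. The names `Rgb`, `blendReplace`, `blendAdd` and `combineUnits` are invented for the illustration; this is not VTK code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Rgb { double r, g, b; };

// REPLACE: the texel overwrites whatever colour was there before.
Rgb blendReplace(const Rgb& /*previous*/, const Rgb& texel)
{
    return texel;
}

// ADD: per-channel sum, clamped to the maximum intensity 1.0.
Rgb blendAdd(const Rgb& previous, const Rgb& texel)
{
    Rgb out = { std::min(previous.r + texel.r, 1.0),
                std::min(previous.g + texel.g, 1.0),
                std::min(previous.b + texel.b, 1.0) };
    return out;
}

// Combines the texels sampled by successive texture units for one fragment:
// unit 0 in REPLACE mode, every following unit in ADD mode.
Rgb combineUnits(const Rgb& fragment, const std::vector<Rgb>& texels)
{
    assert(!texels.empty());
    Rgb result = blendReplace(fragment, texels[0]);
    for (std::size_t i = 1; i < texels.size(); ++i) {
        result = blendAdd(result, texels[i]);
    }
    return result;
}
```

With a red, a blue and a green texel, the fragment ends up white whatever its original colour was, because the first unit discards that colour. If the first unit were also in ADD mode, the underlying fragment colour would leak into the sum. Under the same assumption, a unit that contributes black leaves the result of the other units untouched.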

[cce lang="cpp"]
vtkActor * actor = vtkActor::New();

vtkOpenGLHardwareSupport * hardware =
  vtkOpenGLRenderWindow::SafeDownCast(renWin)->GetHardwareSupport();

bool supported=hardware->GetSupportsMultiTexturing();
int tu=0;
if(supported)
  {
  tu=hardware->GetNumberOfFixedTextureUnits();
  }

if(supported && tu > 2)
  {
  mapper->MapDataArrayToMultiTextureAttribute(
    vtkProperty::VTK_TEXTURE_UNIT_0, "MultTCoords",
    vtkDataObject::FIELD_ASSOCIATION_POINTS);
  mapper->MapDataArrayToMultiTextureAttribute(
    vtkProperty::VTK_TEXTURE_UNIT_1, "MultTCoords",
    vtkDataObject::FIELD_ASSOCIATION_POINTS);
  mapper->MapDataArrayToMultiTextureAttribute(
    vtkProperty::VTK_TEXTURE_UNIT_2, "MultTCoords",
    vtkDataObject::FIELD_ASSOCIATION_POINTS);

  actor->GetProperty()->SetTexture(vtkProperty::VTK_TEXTURE_UNIT_0,
                                   textureRed);
  actor->GetProperty()->SetTexture(vtkProperty::VTK_TEXTURE_UNIT_1,
                                   textureBlue);
  actor->GetProperty()->SetTexture(vtkProperty::VTK_TEXTURE_UNIT_2,
                                   textureGreen);
  }
else
  {
  // no multitexturing just show the green texture.
  if(supported)
    {
    textureGreen->SetBlendingMode(
      vtkTexture::VTK_TEXTURE_BLENDING_MODE_REPLACE);
    }
  actor->SetTexture(textureGreen);
  }

actor->SetMapper(mapper);
[/cce]

The important part.

They obtain a hardware-related class through the one used to define the 3D rendering window, in order to check that the GPU supports multitexturing and, if so, how many textures it can handle at the same time. The next part is the important one: this is where they tell VTK that “texture unit N shall use the coordinates list named MultTCoords” and that “texture unit N uses this image”. If they find that the hardware doesn’t support multitexturing (or offers fewer than three texture units), they only display a single texture, the green one, et voilà.

And that’s pretty much all. All that is left to do is to add the actor to the rendered scene and start the whole thing:

[cce lang="cpp"]
renWin->SetSize(300, 300);
renWin->AddRenderer(renderer);
renderer->SetBackground(1.0, 0.5, 1.0);

renderer->AddActor(actor);
renWin->Render();

int retVal = vtkRegressionTestImage( renWin );
if ( retVal == vtkRegressionTester::DO_INTERACTOR)
  {
  iren->Start();
  }
[/cce]

It may seem easy like that, but that’s because their example is very simple. They can declare a texture coordinates list right in the code and use the same one for all their textures. But when we import a PolyData from an OBJ file, for example, it doesn’t work this way at all! As I said previously, VTK puts all the texture coordinates it finds, without any distinction, in one single list. Their example is built so that the same coordinates can be used for the three textures, but in real life that never really happens. So where we would actually need as many lists as there are textures, we have one big, unsegmented list in which it is impossible to know a priori what goes with what (which range of coordinates is used for which image?).

A New Solution

At this point I only saw one solution left: re-read the 3D object file (e.g. the OBJ file) behind VTK’s back, and count the size of each texture’s coordinates list. This lets us “delimit” the big list and know that, for example, the first 500 coordinates go with the first image.
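The counting step could look like the following sketch, written in standard C++ only. It is not the code of my class: the names `TextureGroup` and `countTextureCoords` are invented for the example. It also makes a strong assumption about the file layout, namely that each usemtl line comes before the “vt” lines it applies to, as described earlier. In files where usemtl only precedes the “f” lines, the counting would have to go through the faces instead.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// How many consecutive "vt" lines belong to one material/texture.
struct TextureGroup {
    std::string material;   // name given after "usemtl" ("" if none was seen yet)
    std::size_t coordCount; // number of "vt" lines in this group
};

// Hypothetical helper: scans an OBJ stream and counts the texture
// coordinates that follow each "usemtl" line.
// Assumption: "usemtl" appears BEFORE the "vt" lines it applies to.
std::vector<TextureGroup> countTextureCoords(std::istream& obj)
{
    assert(obj.good() && "the stream must be open and readable");
    std::vector<TextureGroup> groups;
    std::string line;
    while (std::getline(obj, line)) {
        std::istringstream in(line);
        std::string keyword;
        if (!(in >> keyword)) {
            continue; // blank line
        }
        if (keyword == "usemtl") {
            TextureGroup group = {"", 0};
            in >> group.material;
            groups.push_back(group);
        } else if (keyword == "vt") {
            if (groups.empty()) {
                // Coordinates found before any "usemtl": unnamed group.
                TextureGroup group = {"", 0};
                groups.push_back(group);
            }
            ++groups.back().coordCount;
        }
    }
    return groups;
}
```

Feeding it a `std::ifstream` opened on the OBJ file returns one entry per texture, in file order, and the `coordCount` values are the boundaries needed to cut the big list into pieces.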

That’s how I solved my problem: while re-reading the OBJ file, I created as many lists as there were texture files, and filled these lists correctly. Re-reading the OBJ file tells us which image goes with which coordinates. However, you must not forget to fill the rest of each list with pairs of -1.0, because, as explained before, each texture coordinates list does not apply to the whole mesh, but only to the specific portion covered by its texture.

For example: for a mesh of 5000 points, a first list of 500 texture coordinates must be padded to 5000 entries: the 500 actual texture coordinates, followed by 4500 pairs of (-1.0, -1.0).

On the other hand, if there’s a second list of 500 coordinates (for a second image), this list will need to contain, if you followed:

- 500 (-1.0, -1.0), because the first 500 points are already textured by the first image

- the 500 coordinates of the new list, the one that matches the second texture image

- finally, 4000 (-1.0, -1.0) again, because this image doesn’t cover the rest of the mesh.
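The padding scheme described above can be sketched in standard C++ as follows. Again, this is an illustration and not the code of my class: `TexCoord` and `splitAndPad` are names invented here, and the sketch assumes that the group sizes are already known and that they add up to the total number of coordinates (one per mesh point).

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

typedef std::pair<float, float> TexCoord;

// Hypothetical helper: cuts the single big coordinate list into one
// full-size list per texture. groupSizes[i] is the number of consecutive
// coordinates that belong to texture i. Every entry outside a texture's
// own range is filled with (-1.0, -1.0).
std::vector<std::vector<TexCoord> > splitAndPad(
    const std::vector<TexCoord>& all,
    const std::vector<std::size_t>& groupSizes)
{
    std::size_t total = 0;
    for (std::size_t i = 0; i < groupSizes.size(); ++i) {
        total += groupSizes[i];
    }
    assert(total == all.size());

    const TexCoord filler(-1.0f, -1.0f);
    std::vector<std::vector<TexCoord> > lists;
    std::size_t offset = 0;
    for (std::size_t i = 0; i < groupSizes.size(); ++i) {
        // Start with a list as long as the mesh, entirely "not covered"...
        std::vector<TexCoord> padded(all.size(), filler);
        // ...then copy this texture's own coordinates at their original position.
        for (std::size_t j = 0; j < groupSizes[i]; ++j) {
            padded[offset + j] = all[offset + j];
        }
        lists.push_back(padded);
        offset += groupSizes[i];
    }
    return lists;
}
```

With the numbers of the example (5000 coordinates split into groups of 500, 500 and 4000), the second list comes out as 500 fillers, 500 real coordinates, then 4000 fillers. Each resulting list would then be stored in its own named array on the PolyData and mapped to its own texture unit.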

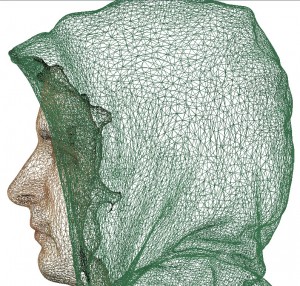

By following this method, we obtain the expected result:

The solution, a hand-made class: vtkTexturingHelper

OK, so now that we know the theory is sound, and that the examples hard-coded to verify it work, we must admit that VTK doesn’t handle multi-texturing well, at least when importing from an OBJ file.

The problem is that, even for an OBJ file (with its very simple syntax), making it work is rather complex.

That’s why I quickly wrote a small class that aims to ease this process, at least when importing from an OBJ file and using JPEG textures (the case we had at my internship, in fact). It mainly works through 4 or 5 important functions:

- associateTextureFiles: tells the class to use a list of images whose names follow a precise pattern. I wrote it with my own concrete case in mind: in all the Artec3D website examples, the image files are named after the OBJ file (for example “sasha”), followed by an underscore, the number of the image (starting at 0) and the extension. This function takes the “root name” of the image list (“sasha” in this example), the extension (“.jpg” here) and the number of images (here, 3). A call looks like helper.associateTextureFiles("sasha", ".jpg", 3); and tells the class that it will have to use 3 JPEG files named “sasha_0.jpg”, “sasha_1.jpg” and “sasha_2.jpg”. The class takes care of rebuilding the file names and importing the image data with the matching VTK class (here a vtkJPEGReader).

- setGeometryFile: sets the file from which VTK will import the mesh geometry. Not very useful on its own; it will probably change or be merged with readGeometryFile.

- readGeometryFile: calls the right VTK reader according to the extension of the file name passed earlier to setGeometryFile. For a file name like “*.obj”, for example, the class uses a vtkOBJReader.

- applyTextures: does the actual work of mapping the textures onto the polyData obtained from the geometry file. Its main flaw is that it relies entirely on the order in which the image file names were given. If the OBJ file is well ordered, i.e. it uses the first image first (e.g. sasha_0.jpg), then the second (sasha_1.jpg), etc., there is no harm done, but images used in any other order could prevent it from working properly. This should be improved sooner or later.

- getActor: the class internally holds a PolyData for the imported geometry (e.g. read with the vtkOBJReader) and a vtkActor onto which the textures are mapped and the matching image data attached. This getter returns the already textured actor, which you only have to add to a vtkRenderer to make it appear on screen; all the complicated multitexturing stuff has been taken care of by the class.
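The naming rule behind associateTextureFiles is simple enough to sketch in a few lines of standard C++. This is only an illustration of the pattern described above (root name, underscore, index starting at 0, extension); `buildTextureFileNames` is a name made up for the example and the real class may be written differently.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: rebuilds the file names "<root>_<i><extension>"
// for i = 0 .. count - 1, e.g. sasha_0.jpg, sasha_1.jpg, sasha_2.jpg.
std::vector<std::string> buildTextureFileNames(const std::string& rootName,
                                               const std::string& extension,
                                               int count)
{
    assert(count >= 0);
    std::vector<std::string> names;
    for (int i = 0; i < count; ++i) {
        std::ostringstream name;
        name << rootName << '_' << i << extension;
        names.push_back(name.str());
    }
    return names;
}
```

Calling it with ("sasha", ".jpg", 3) yields the three file names listed above, in the order in which the textures are expected to be used by the OBJ file.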

Although the class works as it is (I used it to generate the working example you’ve seen before), I already see many possible improvements:

- a smarter reading of the OBJ file: understanding the usemtl instruction, reading the .mtl file and thus detecting the texture files used, which would make it unnecessary to specify the image files (the program would go and read them itself). The best way would be to replace VTK’s vtkOBJReader class entirely, because with large meshes, making the VTK reader read the file and then making the vtkTexturingHelper read it again starts to take a non-negligible amount of time.

- add other possible input sources, for the geometry (VRML, PLY, STL…) and for the image formats (PNG…)

- make the class’s handling of file extensions case-insensitive, or use an enum instead: easier for everyone

- for the moment, the class internally contains only one PolyData and one Actor. This works pretty well for OBJ import, since an OBJ file is meant to hold a single 3D object (see its name…). But since other formats can hold many objects in one file (VRML does), we should move from a single PolyData / Actor instance to a list accessible by index.

- a bit more error handling, maybe exception throwing?
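As a possible starting point for the case-insensitive extension handling, here is a small sketch in standard C++ that turns a file name into an enum value. Everything in it (the `GeometryFormat` enum, the `detectGeometryFormat` function and the list of recognised extensions) is hypothetical and does not exist in the class today.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <string>

// Hypothetical list of geometry formats the helper could support one day.
enum GeometryFormat {
    FORMAT_UNKNOWN,
    FORMAT_OBJ,
    FORMAT_VRML,
    FORMAT_PLY,
    FORMAT_STL
};

// std::tolower must be given an unsigned char value to be well defined.
static char toLowerChar(char c)
{
    return static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
}

// Hypothetical helper: guesses the format from the file extension,
// ignoring case, so that "sasha.obj" and "SASHA.OBJ" are treated alike.
GeometryFormat detectGeometryFormat(const std::string& fileName)
{
    const std::size_t dot = fileName.rfind('.');
    if (dot == std::string::npos) {
        return FORMAT_UNKNOWN;
    }
    std::string extension = fileName.substr(dot + 1);
    std::transform(extension.begin(), extension.end(), extension.begin(),
                   toLowerChar);
    if (extension == "obj") return FORMAT_OBJ;
    if (extension == "wrl" || extension == "vrml") return FORMAT_VRML;
    if (extension == "ply") return FORMAT_PLY;
    if (extension == "stl") return FORMAT_STL;
    return FORMAT_UNKNOWN;
}
```

The caller can then switch on the returned value to pick the matching VTK reader, and treat FORMAT_UNKNOWN as an error instead of failing silently.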

The class and all its associated files are available on my GitHub repository. If you have a standard installation of VTK, it should work as is.

I strongly encourage anyone interested to fork and improve this mini-project! I don’t really work on it anymore (my internship is now over), and I have other projects to work on, so this one may not go much further 😉

See you later froggies !

I’ve been trying to use your code for a while now, but it just doesn’t seem to work. I’m doing something like

vtkTexturingHelper helper = vtkTexturingHelper();
helper.associateTextureFiles("sax", ".jpg", 4);
helper.readGeometryFile("sax.obj");
helper.applyTextures();
vtkActor* TEST = helper.getActor();

But only 1 texture is applied. Am i missing something?

Hello,

did you see the example Gist at this address ?

If not, you may want to reproduce your setup by copying the same code structure. But actually, the way you do it should work. Could you provide all the files you try to work with for me to see if I reproduce the problem ?

Anyway, I’m going to have to investigate some weird things on this.

I tested it as soon as I saw your message. My typical example case with Artec’s sasha.obj still works fine, but sometimes, the program crashes if I use a different number of textures, deep down in OpenGL code (so I may not be able to easily fix it…).

Unfortunately, this code’s last update is quite old, it’s been a while since the last time I used VTK (so I’m a bit… rusty), and I am really overworked at the moment. So this may take a while. Plus, the helper works the way it works after much empirical trial and error. There may be a better approach to the problem that I’m not aware of. Let’s remember we’re trying to do something not officially supported by VTK here, hence the frustrating lack of documentation on the subject.

Also, you could open an issue on the project’s GitHub in case someone has the same problem.

Hey Alexandre!

Good job on the article, it helped me get started on my work:

I work at Kitware (creators of CMake and VTK), and I just started looking into adding support for multi-textures in VTK 7.0, using the OpenGL2 backend (version > 2.1). In that scenario, nothing is actually implemented: neither MapDataArrayToMultiTextureAttribute, needed to use multiple texture coordinates, nor support for blending multiple textures in vtkPolyDataMapper (it takes the first texture and ignores the following ones).

I worked on all this and so far I have:

1) Updated the vtkOBJReader to be able to populate different sets of texture coordinates, filling the empty spaces with (-1,-1)

2) Updated vtkPolyDataMapper to allow for blending of multiple textures in the fragment shader.

Before I work on implementing MapDataArrayToMultiTextureAttribute to use multiple texture coordinates, I wanted to think ahead of the way textures are wrapped by default. With the current implementation in VTK 7, the texture wrapping mode is set to GL_REPEAT, but this behavior adds color to the (-1,-1) texture coordinates, and I guess that’s not good to blend our textures in ADD mode. I believe the good wrapping method should be GL_CLAMP_TO_BORDER to keep all those points black.

Did you need to do anything to adapt the texture wrapping mode? Do you know if it used to default to GL_CLAMP_TO_BORDER? Or if that did not matter to blend them the way you did?

Thanks!

Hello,

I’m sorry I can’t be of great help.

As far as I remember I actually went through a lot of trial and error when developing the vtkTexturingHelper, but in the end I managed to use the VTK multitexturing code nearly out of the box. So that isn’t really a problem that I’ve faced.

Plus it’s been a while since I last worked with VTK. My knowledge may be a bit… obsolete. I wish I could be of better help 🙁

hi,

thanks for the awesome information on your blog.

I was just wondering how I could simulate a simple machining process using VTK,

like a hole being created?

regards

Shayan